Consequence of sound

What Clubhouse tells us about the state of social audio moderation

Good morning! A big hello to readers who signed up this week. This is The Signal’s weekend edition. Today, we look at why content on social audio platforms is so difficult to moderate: not only because of its ephemeral nature, but also because strict regulation doesn’t align with platforms’ priorities. Also in today’s edition: the best long reads, curated for you. Happy weekend reading.

What happened on November 27 was the latest in a string of incidents of harassment faced by Akashi Kaul. But it began in February.

The academic and Clubhouse influencer (she has over 21,000 followers on the social audio app) participated in Clubhouse rooms that supported the protests against the centre’s farm laws. One of those rooms included Mo Dhaliwal, co-founder of the non-profit Poetic Justice Foundation (PJF), who had been accused of promoting a separatist agenda.

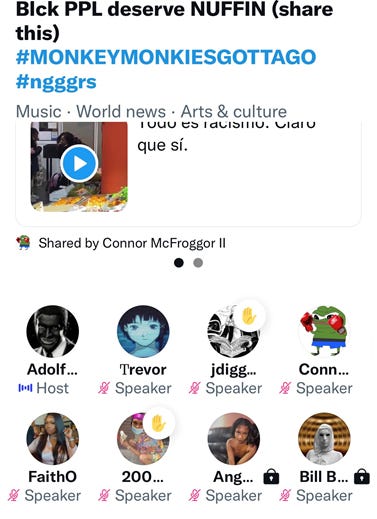

“That’s when a contingent of right-wingers came onto the app. There was verbal abuse and calls to attack me and my family,” Kaul remembers. “They started naming rooms after me. I spotted seven, including one called ‘Akashi R***i’.”

The vitriol escalated when the platform launched its Android app. India, where 95% of mobile users are on Android, became one of Clubhouse’s biggest markets.

Cue November 27, when a now-deleted room called ‘Singlepur’ began auctioning the bodies of Akashi Kaul and her friend Swaty Kumar, who was in the room as it happened.

Kaul and Kumar filed police complaints and appealed to the Clubhouse top brass, including co-founder Rohan Seth, for action. Neither effort has produced results yet.

“Before this happened, my friends had flagged profiles that participated in the ‘Akashi R***i’ room. But I didn’t see any action,” Kaul says. “Instead, their profiles were report-bombed to the point of suspension.” Report-bombing refers to mass reporting, in which a group coordinates false reports against an account to get it shut down.

The ordeal faced by Kaul and Kumar is being reported as a Clubhouse problem. The platform, currently valued at $4 billion, has prioritised growth-first features. Last month, it announced its localisation ambitions in India by making the app available in five languages. But it has yet to reveal any new tools or methods for content moderation. The Facebook Papers have shown how difficult and expensive it is to moderate non-English content, even for a resource-rich company.

Concerns over Clubhouse’s lukewarm approach to moderation date back to 2020, when it faced complaints of anti-Semitism, misogyny, and misinformation.

This isn’t just a Clubhouse story, however. It’s a story about the ephemerality of social audio and the absence of voice metadata (who said what, and when) that could enable tighter moderation.

Tech companies are also reluctant to build in strict safeguards that may hinder adoption. They would rather trade accountability and compliance for the growth Kool-Aid. A Washington Post story revealed that Twitter went ahead with launching Clubhouse rival Spaces despite knowing full well that it would have little control over conversations. The story shows how Spaces has become a platform for hate speech, bullying and misinformation campaigns.

Here today, gone today

Clubhouse was the place to be in April 2020. Securing an invite to share a room with venture capitalists and self-proclaimed pundits was the acme of pandemic networking chops. Its promise of ephemeral social audio was music to the ears of a world tethered to video. Audio, podcasts in particular, had seen listener engagement rise during the Covid-19 pandemic, but Clubhouse offered something more: equal participation, kinship, and impermanence in a fatigued world.

That promise became a bandwagon. Twitter launched Spaces, Discord introduced Stage Channels, Facebook gave us Live Audio, and Reddit piloted Talk. Each competed with the others in a gladiatorial show of features and monetisation tools.

Moderation, however, remained an afterthought.

Enter hiccup one: recording.

When they launched, social audio platforms did not record audio for investigating community-guideline violations, perhaps because not all countries (or US states) permit recording with one-party consent.

Clubhouse introduced in-app recording only in September. Even then, recordings are deleted as soon as a room ends. Discord, which has grappled with extremism on its servers and acquired artificial intelligence (AI) companies to curb online harassment, still doesn’t have an in-app recording feature. Twitter Spaces keeps recordings for 30 to 120 days depending on the violation (and subsequent appeals, if any). Facebook Live Audio and Reddit Talk have yet to add recording features.

All that said, recording is not a solution. The fluid nature of synchronous, community audio means that action can be taken only after harassment has occurred. And unlike text and video, live audio leaves no persistent post that can be taken down. The AI systems social media giants deploy to moderate posts are of little use in this context. No technology can preempt what someone will say when they ‘raise their hand’ in a room.

In 2019, computational social scientist Aaron Jiang and his co-authors published Moderation Challenges in Voice-based Online Communities on Discord, a paper on the complexities of regulating social audio. While the paper focused on Discord, its conclusions apply to all voice platforms.

It’s a moderator’s world, and we’re all living in it

Enter hiccup two: the good faith principle.

The impermanence of social audio is why platforms operate on the good faith principle, or the assumption that moderators will uphold platform rules and guidelines. But this approach does not work either. As seen in the case of Akashi Kaul and Swaty Kumar, the ‘Singlepur’ moderator himself was in violation of Clubhouse community guidelines.

By playing the ‘platform, not publisher’ card, social audio apps not only leave the spring cleaning to moderators, but also leave it to the larger community to flag problematic content as it unfolds. Here’s why this doesn’t work either:

“I was in a room talking about [IPS officer] KPS Gill. At one point, a participant raised his hand just so he could come on stage and say things to me like ‘Nice b**bs’ and ‘No wonder your husband left you’,” Akashi Kaul says. “Despite my complaints, the moderator cited ‘civil discourse’ and did nothing. How was that even remotely civil?”

The question, then, is whether platforms are doing anything to prevent fires rather than relying on users to douse them. Moderating voice is an uphill climb now, but are they proactively solving for it? Do they have teams working on the evolution of the moderation playbook? What measures, AI or otherwise, are they deploying to ensure safer user experiences?

The Signal reached out to representatives from Clubhouse, Facebook, Twitter, and homegrown social audio app Leher with these queries. While Clubhouse and Facebook did not respond, a Twitter representative had this to say:

“Our product, support, and safety teams have been core to our work to ensure that the feature isn't used to amplify content that violates the Twitter Rules. We have tooling that allows us to review reports as they happen in real-time, and we’re working on policies to improve proactive enforcement. We're committed to better serving our Spaces hosts and listeners, and while Spaces is an iterative product, we’ll continue to learn, thoughtfully listen, and make improvements along the way.”

Twitter did not elaborate on “real-time” reviewing, nor did it explain what tools it plans to use for “proactive enforcement”.

Meanwhile, Leher CEO and co-founder Vikas Malpani admits that moderation tools for social audio are in their infancy. But he insists this is par for the course for any new mode of communication.

There’s no doubt that the future is conversation- and community-driven, he says. But any good that comes of it will depend on good tech and the reinforcement of good behaviour.

He cites Leher’s own Clubs feature. These Clubs, or communities, are a prerequisite for creating rooms on the app, which means Club owners control membership and content quality in their networks.

“We’ve tried to structure behaviour this way. Additionally, less than 5% of Clubs and 10% of rooms are public despite the fact that Clubs can have public rooms.”

But keeping garbage away from prying eyes isn’t the same as taking out the trash. What happens when lines are crossed in private rooms?

“Users can block and report, and moderators can remove violators from rooms and Clubs. We rely on Club owners to stabilise behaviour, because you can’t moderate social audio in real-time. Not yet, anyway. We have AI to detect certain cuss words but that’s not foolproof, because AI doesn’t understand context. It shouldn’t get to a point where someone who says ‘shut the f**k up’ is booted when he or she says it jokingly,” Malpani adds.

“Social audio is like Web 1.0. It’s raw but evolving, and the tools to help regulate it in real-time will only come with advancements in AI, server capacities, and bandwidth,” he says.

Bending the rules

Enter hiccup three: compliance. This is what it all boils down to.

On December 9, digital rights advocacy group Internet Freedom Foundation (IFF) published its analysis of compliance reports filed by social media giants for the month of September. As per India’s Information Technology (Guidelines for Intermediaries and Digital Media Ethics Code) Rules, 2021, Significant Social Media Intermediaries (SSMIs)—or platforms with at least five million registered users—are required to file monthly compliance reports.

IFF’s findings reveal a lack of transparency about algorithms for real-time monitoring. This is a crucial point because while platforms say they use “tools” for proactive detection of violations, they do not specify how these tools work, or how effective they are in arresting problems as they unfold.

“Google, WhatsApp and Twitter, especially, have failed to [segregate] data by category of rule violated, by format of content at issue (e.g., text, audio, image, video, live stream), by source of flag (e.g., governments, trusted flaggers, users, different types of automated detection) and by locations of flaggers and impacted users (where apparent),” IFF notes.

As for Facebook, its proactive rate for bullying and harassment (the share of actioned content it found before users reported it) stands at 48.7%.

The old guard’s (read: Google, Meta, and Twitter) ability to get away with noncompliance explains why social audio platforms never erred on the side of caution. It’s a familiar case of putting the cart before the horse.

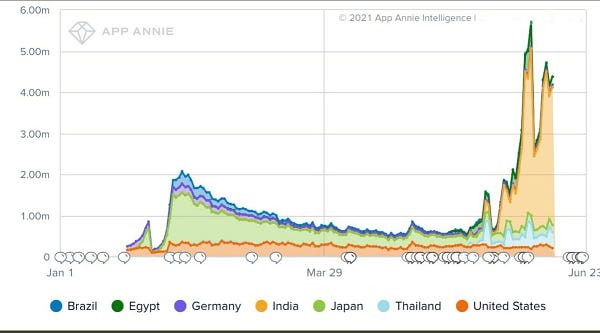

Clubhouse has yet to appoint a grievance officer and a compliance officer for India, both mandatory for SSMIs. According to App Annie data, it crossed five million daily active users in July 2021.

If five million is its daily active user count, it’s a no-brainer that its registered user base, the figure the SSMI rules actually count, is far larger.

“Depending on the nature of harassment, complainants seek recourse under IPC Sections 354 (outraging a woman’s modesty), 506 (criminal intimidation), and Section 66E of the IT Act (violation of privacy), among others,” says Anandita Mishra, an associate litigation counsel at IFF.

“But electronic records are secondary evidence and need to be certified under Section 65B(4) of the Indian Evidence Act.”

Making electronic evidence admissible in court requires a raft of authentications. The ephemerality of social audio and the apathy of SSMIs could make this arduous for people like Akashi Kaul and Swaty Kumar.

“Unless India passes a Data Protection Act, there's no onus on companies to be accountable for data privacy, admissibility, and user safety. This is imperative for fast-tracking innovations in social audio moderation too,” Mishra adds.

As it stands now, Clubhouse’s star is fading. App downloads have tanked, but company spokespeople claim that in-app engagement has increased. The startup recently introduced an ‘NSFW toggle’ to help users filter out explicit rooms.

But the toggle will do nothing to keep user, moderator, and room behaviour in check.

ICYMI

Elusive Attacker: Weeks after Paris Saint-Germain midfielder Kheira Hamraoui was attacked, the club is in turmoil. Her PSG teammate Aminata Diallo was questioned, while Hamraoui has named several other suspects. This story in The New York Times points out how the case is stuck in limbo even as accusations of rivalry and extramarital affairs fly thick and fast.

The Costliest Lie: Former journalist Stephen Glass’s fabricated stories cost him his media career. But this story is not about his media career. Amid the countless apologies, court cases and his failure to obtain a licence to practise law, Glass faced his biggest personal tragedy when his wife, Julie Hilden, developed Alzheimer’s.

Millions Dumped: What if you accidentally threw $6 million into a garbage dump? That’s exactly what James Howells did. Howells mined 8,000 bitcoins and stored them on a hard drive, which was mistakenly thrown away. What follows is the story of a Welshman’s unrelenting attempts to convince local authorities to excavate the landfill, even as he battled marital troubles caused by his error. And Howells has no plans to give up yet.

Setting Standards: It is Amazon, not market forces, that is now setting benchmarks for wages and benefits in the United States. The e-commerce giant is gradually driving wage and benefit changes for low-skilled workers across industries. Starting wages at Amazon are now triple the federal minimum, and other employers are being forced to follow suit.

Fake Birds? As ridiculous as it sounds, the ‘Birds Aren’t Real’ movement seems to be attracting followers. The reality, however, is that this isn’t a conspiracy theory group like QAnon, but an attempt by a few Gen Z volunteers to parody and counter the absurd misinformation campaigns on social media. Fighting lunacy with lunacy seems to be working well.

Musical Exploits: At first glance, Pulitzer-winning journalist Ian Urbina’s offer sounded like a gateway to a successful career in music. But as the details of his ‘project’ emerged, it sounded more like a scam. Artists have now complained that Urbina’s Outlaw Ocean Project ate up a significant portion of their revenue and that the promised collaborations with Spotify and Netflix never materialised. Urbina has since apologised, but the musicians remain baffled by his claims.

— By M Saraswathy
